Project 3: Scene Completion

Due: Fri, Nov 13 (11:59 PM)

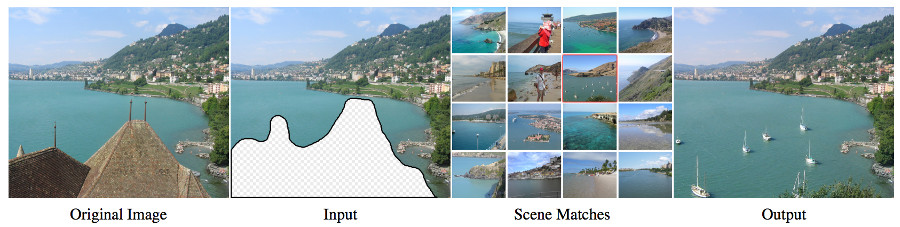

This project involves implementing a simplified version of the paper "Scene Completion Using Millions of Images" (Hays and Efros, SIGGRAPH 2007).

Overview

The paper aims to fill in a selected "hole" region of an input image by using a large dataset of example images as a reference.

Dataset

Instead of using millions of images, we suggest starting with a small dataset of relevant images, such as the images provided by some students who implemented the assignment. If the rest of the assignment is implemented correctly, this will earn a low "A" grade. To get full credit, though, you should explore downloading at least 1,000 to 10,000 images. This can be done through the Bing Image Search API, the Flickr API, or scene databases used in computer vision.

Your Tasks

Your program will take as input:

- An input image containing an object that is to be removed.

- A mask image in which pixels in the hole region to be removed are white and all other pixels are black.

- A database of candidate background images from which to draw content when filling the hole.

- Generally, all of these images should be resized so that their longest axis has the same length.
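As a minimal sketch of preparing these inputs, the helpers below compute a target size that gives every image the same longest axis, and convert a loaded mask into a boolean hole array. The 512-pixel default and the threshold of 127 are assumptions chosen for illustration; the actual loading and resizing can be done with any image library (for example, Pillow's `Image.resize`).

```python
import numpy as np

def target_size(width, height, longest=512):
    """Return (width, height) scaled so the longest axis equals `longest` (assumed default)."""
    scale = longest / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))

def mask_to_hole(mask, threshold=127):
    """Convert a mask (white = hole, black = keep) into a boolean hole array.

    Accepts an H x W grayscale array or an H x W x C color array; color masks
    are averaged over channels first. The threshold is an assumption."""
    mask = np.asarray(mask, dtype=float)
    if mask.ndim == 3:
        mask = mask.mean(axis=2)
    return mask > threshold
```

For example, a 1024 x 768 photo would be resized to 512 x 384, and the same target size applied to its mask before thresholding.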

Your tasks are to:

- Implement matching based on the GIST descriptor. You may use existing implementations of the GIST descriptor (Python, MATLAB). Sort all database photographs by their similarity to the input image, using both the GIST descriptor and a low-resolution color thumbnail, in the same manner as the scene completion paper. This way, only the top matches (for example, the top 10) need to be considered for further processing.

- For each candidate database match, search over a range of translations and scales: the database image content can be translated or scaled before being composited on top of the input image. The scene completion paper discusses several energy terms that can be used to measure the quality of each possible translation and scale. Compute the composited final result only for the best translation and scale. The two subsequent stages assume that the background image has already been translated and scaled appropriately.

- Use a minimum cut library to find an optimal seam between the background and foreground, that is, where the cut between the two should be placed. You can use graph cut libraries for Python (see the method cut_simple_vh) or MATLAB. The data cost can be set up as in equation (2) of the scene completion paper, and the smoothness cost can be set up as described in the following two paragraphs.

- Finally, for each matched database image, composite the database image region on top of the input image, using the region found by the minimum cut. Unlike the scene completion paper, you do not need to do Poisson blending, which adjusts the colors to be more compatible (although I will give a few extra points if you do implement Poisson blending).
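The GIST and thumbnail ranking step described above can be sketched in NumPy as follows. It assumes the GIST descriptors and low-resolution thumbnails have already been computed (for example, with one of the GIST implementations mentioned above) and stacked into arrays. The `color_weight` value and the optional `thumb_mask` that ignores the hole are illustrative assumptions, not the paper's exact settings.

```python
import numpy as np

def rank_matches(query_gist, query_thumb, db_gists, db_thumbs,
                 thumb_mask=None, color_weight=0.5, top_k=10):
    """Return indices of the top_k database images, best match first.

    query_gist: (d,)        db_gists: (n, d)
    query_thumb: (th, tw, c) db_thumbs: (n, th, tw, c)
    thumb_mask: optional (th, tw) array, 1 = compare, 0 = inside the hole.
    color_weight is a hypothetical weight balancing the two distances."""
    # Sum of squared differences between GIST descriptors.
    gist_d = np.sum((db_gists - query_gist) ** 2, axis=1)
    # Sum of squared differences between color thumbnails.
    diff = (db_thumbs - query_thumb) ** 2
    if thumb_mask is not None:
        diff = diff * thumb_mask[None, :, :, None]  # ignore thumbnail pixels in the hole
    color_d = diff.reshape(len(db_thumbs), -1).sum(axis=1)
    score = gist_d + color_weight * color_d
    return np.argsort(score, kind="stable")[:top_k]
```

Only the returned indices need to go through the more expensive placement, seam, and compositing stages.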
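A simplified version of the translation and scale search might look like the sketch below. Instead of the paper's full set of energy terms, it scores each candidate placement only by the mean squared color difference over a band of known pixels around the hole (a swapped-in simplification), uses nearest-neighbour resampling, and rejects placements where the warped match does not cover the hole and its context. The `context` mask, the candidate scales, and the shift grid are assumptions for illustration.

```python
import numpy as np

def warp_nearest(img, scale, dy, dx, out_shape):
    """Scale `img` about its top-left corner, then shift it by (dy, dx).

    Uses nearest-neighbour sampling. Returns the warped H x W x C image and an
    H x W mask of output pixels that received a source pixel."""
    h, w = out_shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_y = np.round((ys - dy) / scale).astype(int)
    src_x = np.round((xs - dx) / scale).astype(int)
    valid = (src_y >= 0) & (src_y < img.shape[0]) & (src_x >= 0) & (src_x < img.shape[1])
    out = np.zeros((h, w) + img.shape[2:], dtype=float)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out, valid

def best_placement(input_img, match_img, hole, context,
                   scales=(0.81, 0.9, 1.0), shifts=range(-16, 17, 4)):
    """Return (cost, (scale, dy, dx)) of the lowest-cost placement, or (inf, None).

    hole, context: H x W boolean masks; `context` is an assumed band of known
    pixels around the hole used to judge how well the match fits."""
    best = (np.inf, None)
    for s in scales:
        for dy in shifts:
            for dx in shifts:
                warped, valid = warp_nearest(match_img, s, dy, dx, hole.shape)
                if not valid[hole | context].all():
                    continue  # the match must cover the hole and its context
                err = warped[context] - input_img[context]
                cost = np.sum(err ** 2) / context.sum()
                if cost < best[0]:
                    best = (cost, (s, dy, dx))
    return best
```

A texture term or a finer search grid could be added to the cost in the same loop; the exhaustive search is kept here because it is easy to check.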
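The seam-finding step can be illustrated with a small, dependency-free minimum cut (Edmonds-Karp max flow) over the pixel grid. This is only a sketch for tiny images; for real photographs you would use one of the graph cut libraries mentioned above. The costs are assumptions rather than the paper's exact formulation: hole pixels must come from the match, pixels farther than `radius` from the hole must keep the input, and in between the cost of using the match grows as `(k * distance) ** 3` (an assumed distance penalty; check equation (2) of the paper for the actual form). The smoothness cost between neighbours with different labels is the summed color difference between the two images at those neighbours, a standard graph-cut-textures choice swapped in here.

```python
from collections import defaultdict, deque
import numpy as np

BIG = 1e9  # stands in for an "infinite" cost that the cut must never pay

def seam_mask(input_img, match_img, hole, radius=80, k=0.01):
    """Return an H x W boolean mask: True where the (already aligned) match is used.

    input_img, match_img: H x W x C float arrays; hole: non-empty H x W boolean array.
    radius and k are hypothetical parameters of the assumed data cost."""
    h, w = hole.shape
    # Euclidean distance from every pixel to the nearest hole pixel (brute force).
    hole_pts = np.argwhere(hole)
    ys, xs = np.mgrid[0:h, 0:w]
    dist = np.hypot(ys[..., None] - hole_pts[:, 0], xs[..., None] - hole_pts[:, 1]).min(axis=-1)
    diff = np.abs(input_img - match_img).sum(axis=-1)  # per-pixel color difference

    S, T = h * w, h * w + 1  # source side = keep input, sink side = use match
    cap = defaultdict(lambda: defaultdict(float))
    for y in range(h):
        for x in range(w):
            i = y * w + x
            if hole[y, x]:
                cap[i][T] += BIG  # cut if a hole pixel keeps the input: forbidden
            elif dist[y, x] > radius:
                cap[S][i] += BIG  # cut if a far pixel uses the match: forbidden
            else:
                cap[S][i] += (k * dist[y, x]) ** 3  # cost of using the match here
            for yy, xx in ((y, x + 1), (y + 1, x)):  # right and down neighbours
                if yy < h and xx < w:
                    j = yy * w + xx
                    wgt = diff[y, x] + diff[yy, xx]  # paid only if the labels differ
                    cap[i][j] += wgt
                    cap[j][i] += wgt

    while True:
        # Breadth-first search for an augmenting path in the residual graph.
        parent = {S: None}
        queue = deque([S])
        while queue and T not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-9 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if T not in parent:
            break  # no augmenting path: `parent` holds the source side of the min cut
        # Push the bottleneck amount of flow along the path found.
        f, v = float("inf"), T
        while parent[v] is not None:
            f = min(f, cap[parent[v]][v])
            v = parent[v]
        v = T
        while parent[v] is not None:
            u = parent[v]
            cap[u][v] -= f
            cap[v][u] += f
            v = u

    use_match = np.ones((h, w), dtype=bool)
    for node in parent:
        if node < h * w:
            use_match[node // w, node % w] = False  # reachable from source: keep input
    return use_match
```

On a 1 x 6 strip whose only zero-difference neighbour pair sits between the third and fourth pixels, the cut lands on that pair, so the first three pixels take the match.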
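Without Poisson blending, the final compositing step reduces to a per-pixel selection between the two images. The sketch below assumes the match has already been translated and scaled into the input's frame, and that `use_match` is the boolean region produced by the minimum cut (True where the match should be used).

```python
import numpy as np

def composite(input_img, match_img, use_match):
    """Copy match pixels where use_match is True and keep the input elsewhere.

    input_img, match_img: H x W x C arrays (match already aligned);
    use_match: H x W boolean array from the seam-finding stage."""
    # Broadcast the 2-D mask across the color channels.
    return np.where(use_match[..., None], match_img, input_img)
```

Poisson blending, for the extra credit, would replace this hard selection inside the cut region with a gradient-domain solve.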

What to submit:

- Your code

- Result images

- A brief writeup which discusses the results

Policies

Feel free to collaborate on solving the problem, but write and submit your own code individually.

Submission

Submit your assignment in a zip file named yourname_project3.zip.

Finally, submit your zip file to UVA Collab.