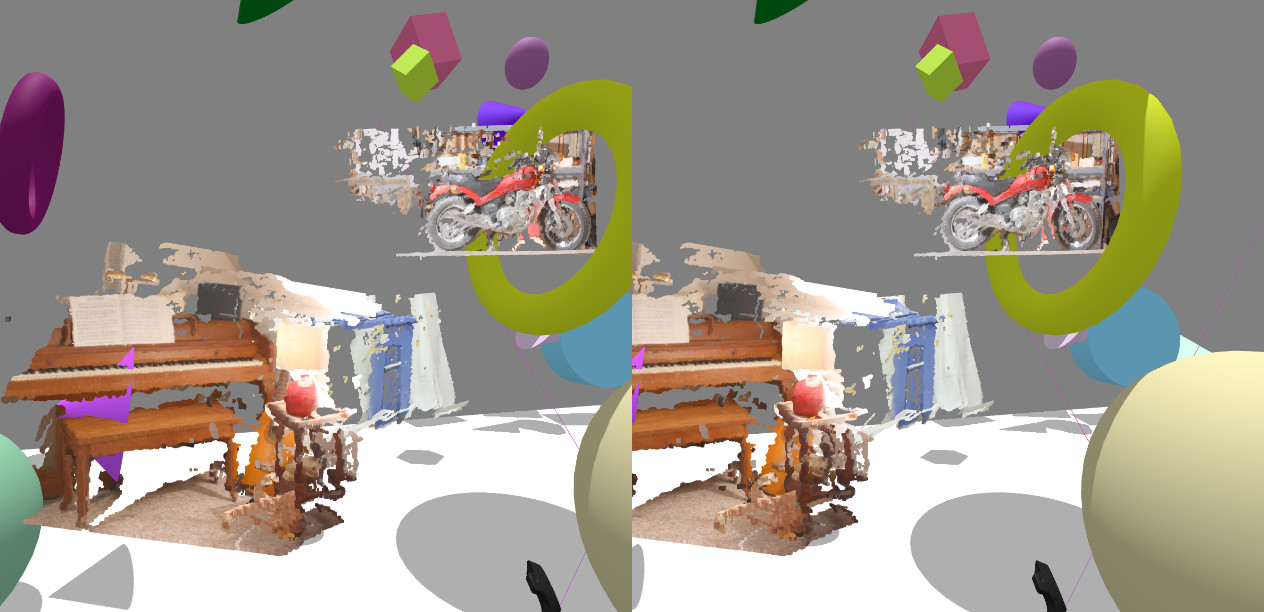

A motorcycle driving through a torus, next to a piano, a recycle bin, spheres, cubes, and tori. Imagery from Middlebury dataset.

| Day | Time |
| --- | --- |
| Sunday | 9:00 to 22:00 (shared with CS 4720 and CS 4730 from 13:00 to 21:00) |
| Monday | 19:00 to 23:00 (not formally reserved but have permission from Mark Sheriff to join CS 4720/4730's session from 19:00 to 22:30) |
| Tuesday | 16:00 to 23:00 (during 19:00 to 20:00, not formally reserved but have permission from Mark Sheriff to join CS 4730's session) |
| Wednesday | 13:30 to 15:30, 19:00 to 22:00 |
| Thursday | 19:30 to 22:00 |
| Friday | 18:00 to 22:00 |
| Saturday | 9:00 to 22:00 |

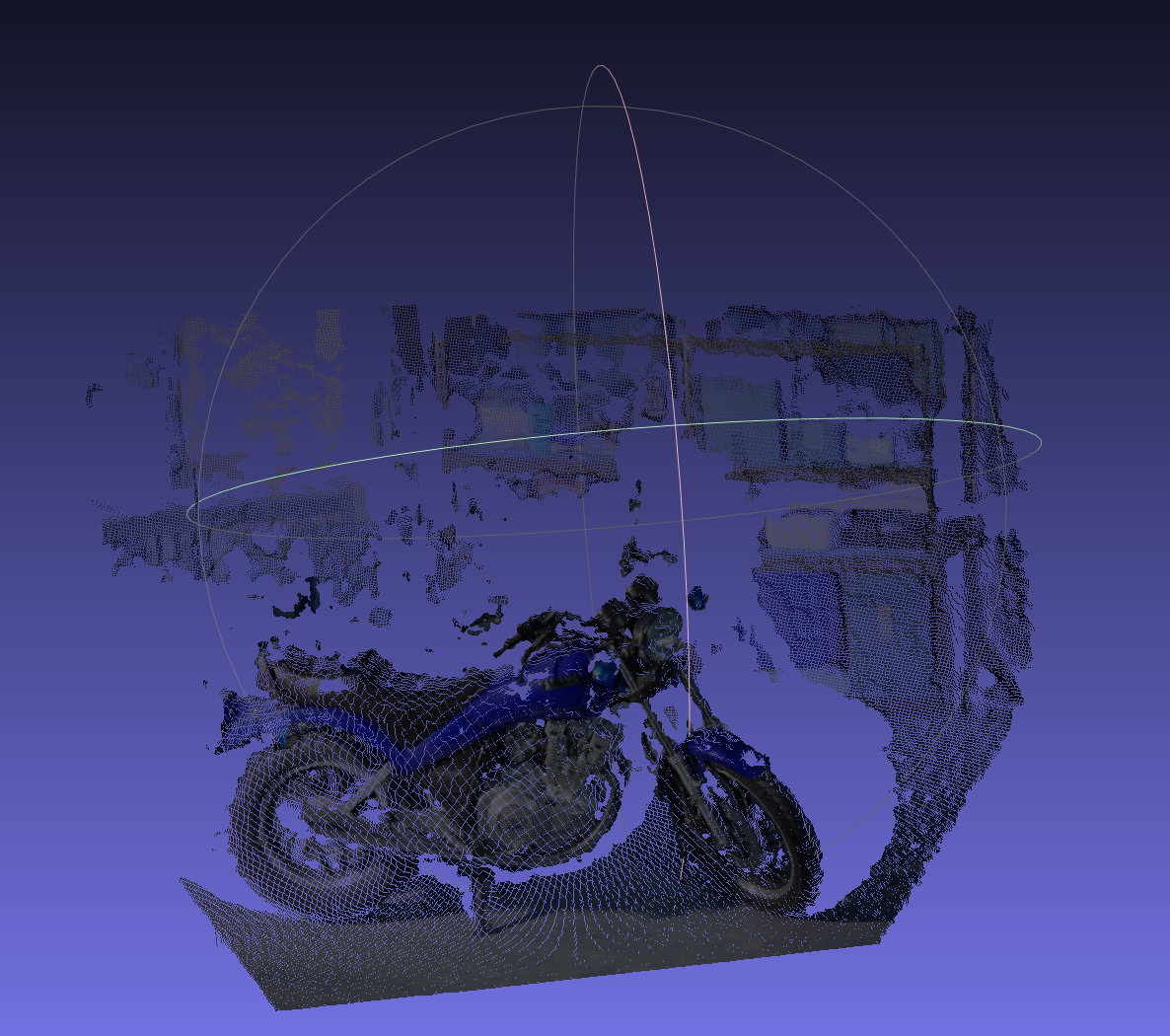

uniquenessRatio and blockSize parameters until more outliers are rejected and the produced meshes seem more reasonable; (2) Call cv2.pyrDown() twice on each input image to avoid excessive mesh resolution (which slows down the VR experience later); (3) In the write_ply() function, the colors array encodes the RGB colors of the produced points. However, due to an apparent bug in one of the converters or loaders later on, the red and blue channels end up swapped when the model is displayed in the viewer. A proper fix would be to locate and fix this bug, but an easy workaround is to swap the color order from RGB to BGR in the colors array before saving (either fix is acceptable for this project).
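Tips (2) and (3) can be sketched as follows. This is a minimal illustration, not the code from stereo_match.py: the helper names `downsample_twice` and `write_ply_swapped` are hypothetical, and the writer uses plain Python sequences rather than the NumPy arrays used in the sample script. It assumes the colors arrive as (R, G, B) triples and that the point count is small enough for a simple loop.

```python
def downsample_twice(img):
    """Halve the image resolution twice (tip 2); needs OpenCV at call time."""
    import cv2  # imported lazily so the PLY writer below works without OpenCV
    return cv2.pyrDown(cv2.pyrDown(img))


def write_ply_swapped(path, verts, colors):
    """Write an ASCII PLY file with each color's red and blue values exchanged.

    verts  : sequence of (x, y, z) coordinates
    colors : sequence of (r, g, b) integer values, one per vertex
    """
    verts = [tuple(float(c) for c in v) for v in verts]
    # Reverse each (r, g, b) triple to (b, g, r) to compensate for the
    # channel swap that happens later in the conversion/viewing pipeline.
    swapped = [tuple(int(c) for c in rgb)[::-1] for rgb in colors]
    if len(verts) != len(swapped):
        raise ValueError("verts and colors must have the same length")

    header = "\n".join([
        "ply",
        "format ascii 1.0",
        f"element vertex {len(verts)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ])
    with open(path, "w") as f:
        f.write(header + "\n")
        for (x, y, z), (c0, c1, c2) in zip(verts, swapped):
            f.write(f"{x:f} {y:f} {z:f} {c0:d} {c1:d} {c2:d}\n")
```

If you locate and fix the actual bug in the converter or loader instead, the swap should be removed again so the colors are not exchanged twice.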

- pcl_ply2pcd from the Point Cloud Library (for Mac, see also Homebrew install instructions). Make this conversion to .pcd.
- three.js/examples/webgl_loader_pcd.html shows a point cloud in Firefox. Modify the example to load one of your exported .pcd files instead.
- three.js/examples/webvr*.html.
- three.js/examples/webvr_vive_dragging.html. You can do the coding for this step on any machine of your choice and without a VR headset; in the next bullet you can deploy it to the VR hardware. A few points your program should take care of: (1) The loader's .load method calls an anonymous function on a mesh. In that function I suggest using THREE.GeometryUtils.center( mesh.geometry ) to center the mesh's geometry (to prevent it from being far off-camera). (2) In the loader, I suggest using a small scale factor (see the file webvr_vive_dragging.html for how to set the scale factor). (3) Decrease the number of spheres, tori, and cubes in the scene so that you can grab your point clouds more easily using the controller. (4) The object intersection is not very efficient, since it loops through all primitives (e.g. points, triangles) in the scene rather than using a bounding volume hierarchy such as an octree to accelerate ray-object intersections. If the point clouds do not contain too many points (e.g. the .pcd files are smaller than the 4 MB suggested before), a simple workaround is to perform intersection calls only when there are spare CPU cycles rather than on each rendered frame. This can be done by moving the IntersectObject calls from render() to animate().
- three.js/examples/webvr*.html) before trying your code. (4) There is a bug in the three.js ray intersector, so that the point cloud objects are "bigger" than they should be for intersection purposes. One solution is to put the controller as close as possible to the object you want to move before clicking the trigger to move it.
- yourname_project2.zip. Please include your source code for both part 1 and part 2: stereo_match.py, the .ply and .pcd files you reconstructed, your final VR viewer HTML code (based on modifying webvr_vive_dragging.html), and your 2+ screenshots and selfie. Please submit only these files; do not zip up the entire 1 GB three.js repository.
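One way to avoid accidentally zipping the whole three.js repository is to build the archive from an explicit file list. The sketch below uses only Python's standard `zipfile` module; the helper name `build_submission` and every file name in the usage comment other than stereo_match.py, webvr_vive_dragging.html, and yourname_project2.zip are placeholders for your own files.

```python
import zipfile
from pathlib import Path


def build_submission(zip_path, files):
    """Create a zip containing only the listed files, stored by file name."""
    missing = [str(f) for f in files if not Path(f).is_file()]
    if missing:
        raise FileNotFoundError("missing files: " + ", ".join(missing))
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for f in files:
            # arcname drops the directory part, so the archive stays flat
            # and does not reproduce the three.js directory tree.
            zf.write(f, arcname=Path(f).name)
    return zip_path


# Example usage (file names other than the first are placeholders):
# build_submission("yourname_project2.zip", [
#     "stereo_match.py",
#     "three.js/examples/webvr_vive_dragging.html",
#     "scene1.ply", "scene1.pcd",
#     "screenshot1.png", "screenshot2.png", "selfie.jpg",
# ])
```

Because files are stored by name only, two files with the same name in different directories would collide, so rename them first if that applies.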