Project 3: Neural Networks

Due: Thurs, April 6 (11:59 PM)

For this assignment, we suggest using Keras for Python. See also some notes about Keras installation and available compute facilities. The assignment is designed so that it can be completed using only a CPU, but if you have a GPU, you can also install Keras with GPU support (e.g. CUDA for the backend).

Assignment Overview

Your goal for this assignment is to experiment with several different fully connected and CNN architectures for image classification problems, including training your own networks from scratch and fine-tuning an existing ImageNet-trained model.

Please answer all questions (indicated with Q #: in bold on this webpage) in your readme, organized by question number.

Keras Documentation

Keras has quite helpful documentation. For this project, you may want to look at the core layers (Dense: a dense fully-connected layer, ...), the convolutional layers (Conv2D, ...), the model class (which has methods such as evaluate, which evaluates the loss and summary metrics for the model, and predict, which generates test-time predictions from the model), the sequential class (which has some additional methods such as predict_classes, which generates class predictions), and the section on loading and saving models. You may also find this example of prediction with ResNet-50 useful (see the heading in the previous link titled "Classify ImageNet classes with ResNet50," which includes code to resize the image before running prediction).
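Recent Keras releases (for example Keras 3) no longer include `predict_classes`. A minimal substitute, sketched below under the assumption that the model ends in a softmax layer, takes the argmax of `predict`'s output; `predict_classes` here is our own helper function, not a Keras method.

```python
import numpy as np


def predict_classes(model, x, batch_size=32):
    """Return the most probable class index for each input.

    Mimics the older Sequential.predict_classes for a model whose last
    layer is a softmax: predict() returns one probability row per input,
    and argmax picks the column (class) with the highest probability.
    """
    probs = model.predict(x, batch_size=batch_size, verbose=0)
    return np.argmax(probs, axis=-1)
```

For a model compiled with `metrics=['accuracy']`, `loss, acc = model.evaluate(x_test, y_test, verbose=0)` returns the test loss and test accuracy.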

Training Times

On my laptop it took about ten to twenty minutes to train each model for a few epochs. Slower computers will take longer, so plan ahead, especially if your computer is slow, to avoid a training-time bottleneck at the 11th hour.

I also highly recommend that while debugging your program, you make it run more quickly by lowering the number of training samples and/or epochs, and/or by saving your model after training (and re-loading it on later runs of the program). This avoids waiting a long time for the model to train, only to discover a minor typo later in your program (which triggers, e.g., a run-time exception in Python).
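One way to follow this advice is sketched below, assuming the training data are NumPy arrays (or lists). `DEBUG`, `maybe_subsample`, and `train_or_load` are hypothetical names; `load_fn` would typically be `keras.models.load_model`, and the model only needs a `save(path)` method, as Keras models have.

```python
import os

DEBUG = True  # hypothetical switch: set to False for the final, full-size run


def maybe_subsample(x, y, factor=20):
    """While DEBUG is on, keep every `factor`-th example to shorten training."""
    if DEBUG:
        return x[::factor], y[::factor]
    return x, y


def train_or_load(path, build_and_train, load_fn):
    """Reuse a saved model at `path` if it exists; otherwise train it and save it.

    build_and_train: a zero-argument function you write that returns a trained model.
    load_fn: a function that loads a model from a file path.
    """
    if os.path.exists(path):
        return load_fn(path)
    model = build_and_train()
    model.save(path)
    return model
```

For example, `model = train_or_load("mnist_mlp.h5", my_train_function, load_model)`, where both names are placeholders for your own code. Delete the saved file whenever you change the architecture; otherwise the old cached model is silently reused.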

Image Classification

Part A: MNIST with a side of MLP (40 points)

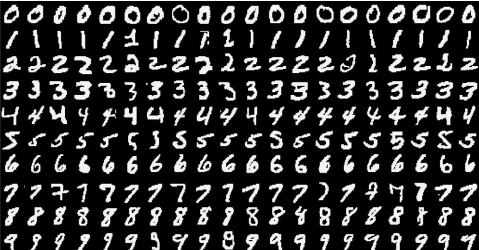

The MNIST handwritten digit recognition dataset contains images of numerical digits 0-9, which have been labeled by the corresponding labels 0-9. These can therefore be used for supervised training.

Example digits from the MNIST dataset.

Experiment with classifying digits from MNIST using fully-connected multilayer perceptrons (MLPs). You can start from the mnist_mlp.py example code in Keras.

- (8 points). Try removing all hidden layers, so there is only a 10-neuron softmax output. For this project, you can also remove the dropout layers.

Q #1: What is the test set accuracy?
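A minimal sketch of the MLP variants in this part, assuming a recent Keras (tf.keras 2.x or Keras 3) and MNIST images flattened to 784-element vectors with one-hot labels, as prepared in mnist_mlp.py. `build_mlp` is a hypothetical helper; with `hidden_sizes=()` it produces the softmax-only network with no hidden or dropout layers. The RMSprop optimizer follows mnist_mlp.py.

```python
def build_mlp(hidden_sizes=(), loss="categorical_crossentropy",
              num_inputs=784, num_classes=10):
    """Build and compile a fully connected MNIST classifier.

    hidden_sizes: number of neurons in each hidden ReLU layer; () means none.
    loss: any Keras loss name, e.g. "mean_squared_error".
    """
    # Imported inside the function so this file can be imported without Keras.
    from keras.layers import Dense, Input
    from keras.models import Sequential

    model = Sequential()
    model.add(Input(shape=(num_inputs,)))
    for units in hidden_sizes:
        model.add(Dense(units, activation="relu"))
    model.add(Dense(num_classes, activation="softmax"))
    model.compile(loss=loss, optimizer="rmsprop", metrics=["accuracy"])
    return model
```

Used as `model = build_mlp(())`, followed by `model.fit(...)` and `model.evaluate(x_test, y_test, verbose=0)`, the same helper also covers the later steps: `build_mlp((16, 16))`, `build_mlp((256, 256))`, and `build_mlp((256, 256), loss="mean_squared_error")`.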

- (15 points). Keras does not seem to have a built-in function for computing confusion matrices. Write a function that computes the test-time confusion matrix given a Keras model and the test input/output values.

Note that you can call the confusion matrix function available in scikit-learn if you like. However, it expects its inputs to be 1D arrays of integers indicating the class of each exemplar digit, whereas the supervised test values for Keras are 2D arrays such that A[i][j] indicates the probability that exemplar digit i is j. So you will have to convert between these formats. Since the data is not balanced, we also suggest row-normalizing the confusion matrix so that each row sums to 1.
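The format conversion and normalization described above can be sketched with NumPy alone: `np.argmax` turns each row of the 2D one-hot labels or softmax probabilities into a 1D class index, and dividing each row by its total gives the row-normalized matrix. The function names are hypothetical, and the counting step could be replaced by scikit-learn's `confusion_matrix(y_true, y_pred)` if you prefer.

```python
import numpy as np


def confusion_counts(y_true, y_pred, num_classes):
    """cm[i, j] = number of test examples of class i predicted as class j."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)  # unbuffered add counts repeated (i, j) pairs
    return cm


def row_normalize(cm):
    """Divide each row by its total so rows sum to 1 (all-zero rows stay zero)."""
    totals = cm.sum(axis=1, keepdims=True).astype(float)
    return np.divide(cm, totals, out=np.zeros(cm.shape), where=totals > 0)


def keras_confusion_matrix(model, x_test, y_test, normalize=True):
    """Test-time confusion matrix for a softmax classifier with one-hot labels."""
    y_true = np.argmax(y_test, axis=1)  # 2D one-hot rows -> 1D class indices
    y_pred = np.argmax(model.predict(x_test, verbose=0), axis=1)
    cm = confusion_counts(y_true, y_pred, y_test.shape[1])
    return row_normalize(cm) if normalize else cm
```

Calling `np.set_printoptions(precision=2, suppress=True)` before printing makes the 10x10 result easier to read.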

Q #2: What is the confusion matrix after normalization?

Q #3: Also, which digits have the highest and lowest entries along the diagonal?

Q #4: For the worst performing digit, which other digit is it most often confused with?

Q #5: What might be the explanation for the digit that performs best and worst?
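Once you have the row-normalized matrix, Q #3 and Q #4 can be checked programmatically as well as by eye. This sketch assumes `cm` is a square NumPy array whose row `i` corresponds to true class `i`; the helper names are hypothetical. In a row-normalized matrix, each diagonal entry is the fraction of that digit's test examples classified correctly.

```python
import numpy as np


def best_and_worst_class(cm):
    """Indices of the largest and smallest diagonal entries."""
    diag = np.diag(cm)
    return int(np.argmax(diag)), int(np.argmin(diag))


def most_confused_with(cm, true_class):
    """The wrong class that `true_class` is most often predicted as."""
    row = np.array(cm[true_class], dtype=float)  # a copy, so cm is not modified
    row[true_class] = -np.inf                    # ignore the correct predictions
    return int(np.argmax(row))
```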

- (7 points). Add two hidden fully connected layers that each have a small number of neurons, say 16 each.

Q #6: What is the test accuracy of this network?

- (10 points). Increase the number of neurons in each hidden fully connected layer to a larger number, say 256.

Q #7: What is the test accuracy of this network?

Q #8: What is the new confusion matrix?

Q #9: Which of the above three networks performed best at test time, and why?
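When comparing the three networks, it can help to know how much capacity each one has. A Dense layer with n_in inputs and n_out neurons has n_in * n_out weights plus n_out biases, so the totals for the 784-input, 10-output MLPs above can be worked out directly; `dense_param_count` is a hypothetical helper, and `model.summary()` should report the same totals. Capacity is only one factor in test accuracy, so treat these numbers as context rather than as an answer to Q #9.

```python
def dense_param_count(layer_sizes):
    """Trainable parameters of a chain of Dense layers.

    layer_sizes lists the input size, each hidden layer size, then the output
    size; each layer contributes n_in * n_out weights plus n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


for hidden in [(), (16, 16), (256, 256)]:
    sizes = [784, *hidden, 10]
    print(f"hidden={hidden}: {dense_param_count(sizes)} parameters")
# hidden=(): 7850 parameters
# hidden=(16, 16): 13002 parameters
# hidden=(256, 256): 269322 parameters
```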

Q #10: If you change the loss from cross entropy to L2 (in Keras this is called 'mean_squared_error'), is the result better or worse?
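To see how the two losses treat the same prediction, the sketch below computes both for a single three-class example, following the definitions Keras uses: categorical cross-entropy is -sum(y_true * log(y_pred)), and 'mean_squared_error' averages (y_true - y_pred)^2 over the classes. Keras additionally clips predictions away from 0 and 1, which is omitted here, and the probability vectors are made up for illustration.

```python
import math


def categorical_crossentropy(y_true, y_pred):
    """-sum(t * log(p)); with a one-hot y_true only the true class contributes."""
    return -sum(t * math.log(p) for t, p in zip(y_true, y_pred) if t > 0)


def mean_squared_error(y_true, y_pred):
    """Mean of (t - p)^2 over the classes."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1.0, 0.0, 0.0]            # the example's true class is 0
for y_pred in ([0.7, 0.2, 0.1],     # fairly confident and correct
               [0.01, 0.98, 0.01]): # confident and wrong
    ce = categorical_crossentropy(y_true, y_pred)
    mse = mean_squared_error(y_true, y_pred)
    print(y_pred, round(ce, 4), round(mse, 4))
# [0.7, 0.2, 0.1] 0.3567 0.0467
# [0.01, 0.98, 0.01] 4.6052 0.6469
```

Cross-entropy grows without bound as the predicted probability of the true class approaches 0, while the squared error of a probability vector stays bounded, which is one reason the two losses can train differently.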

- You can turn in a single copy of your MLP code after you have finished modifying it for the fourth graded item above (256 neurons per hidden layer).

Part B: MNIST garnished with a CNN (24 points)

Experiment with the MNIST dataset using different CNN classifiers. You can start with the mnist_cnn.py example in Keras.

- (24 points, 8 per architecture). Compare the following three architectures:

(A) a 3x3 convolution layer with 4 filters, followed by a softmax,

(B) a 3x3 convolution layer with 32 filters, followed by a softmax,

(C) a 3x3 convolution layer with 32 filters, followed by a 2x2 max pool, followed by a softmax.

Q #11: What are the test accuracies and training times for the above three classifiers?
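A minimal sketch of the three architectures and of timing their training, assuming a recent Keras (tf.keras 2.x or Keras 3) and MNIST images shaped (28, 28, 1) with one-hot labels, as prepared in mnist_cnn.py. It assumes each convolution uses ReLU, as mnist_cnn.py does, and that "followed by a softmax" means flattening the feature maps into a 10-neuron softmax Dense layer. `build_cnn`, `timed_fit`, and `ARCHITECTURES` are hypothetical names, and Adam is swapped in for mnist_cnn.py's Adadelta because Adadelta's default learning rate differs between Keras versions.

```python
import time


def timed_fit(model, *args, **kwargs):
    """Call model.fit(...) and also return the wall-clock training time in seconds."""
    start = time.perf_counter()
    history = model.fit(*args, **kwargs)
    return history, time.perf_counter() - start


def build_cnn(filters, pool=False, num_classes=10):
    """One 3x3 convolution layer, an optional 2x2 max pool, then a softmax."""
    # Imported inside the function so this file can be imported without Keras.
    from keras.layers import Conv2D, Dense, Flatten, Input, MaxPooling2D
    from keras.models import Sequential

    stack = [Input(shape=(28, 28, 1)), Conv2D(filters, (3, 3), activation="relu")]
    if pool:
        stack.append(MaxPooling2D(pool_size=(2, 2)))
    stack += [Flatten(), Dense(num_classes, activation="softmax")]
    model = Sequential(stack)
    model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
    return model


ARCHITECTURES = {
    "A": dict(filters=4),
    "B": dict(filters=32),
    "C": dict(filters=32, pool=True),
}

# Example usage (x_train, y_train, x_test, y_test as prepared in mnist_cnn.py):
# for name, cfg in ARCHITECTURES.items():
#     model = build_cnn(**cfg)
#     _, seconds = timed_fit(model, x_train, y_train, batch_size=128, epochs=1, verbose=0)
#     loss, acc = model.evaluate(x_test, y_test, verbose=0)
#     print(f"{name}: test accuracy {acc:.4f}, training time {seconds:.1f} s")
```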

Part C: Finely-tuned Cats and Dogs (36 points)

In this part of the assignment, you will experiment with fine-tuning an existing ImageNet-trained CNN for the problem of dog vs. cat classification. This task is fairly simple for the CNN, since it has already been trained on different varieties of dogs and cats, and only has to learn how to combine this existing knowledge.

- Download and extract the cat and dog images. We are going to apply a very simple fine-tuning of the VGG-16 model, which has been pretrained on ImageNet. In particular, we will remove the fully connected layers from VGG-16, add a single sigmoid neuron (so an output closer to 0 is classified as cat and an output closer to 1 as dog), and then freeze the main VGG-16 network so that only the weights of the sigmoid neuron are trained.

- (12 points). You can start with this fine-tuning code, which was modified slightly from generic fine-tuning code from the Keras project. Note: check your Keras version; if it is 2.0, make the modifications noted in the last lines of the above file. The changes you need to make are to point the code to your training and test data, and to enter the right number of layers to freeze for NUM_LAYERS. Hint: You can print the layers (model.layers) and figure out which layers should be frozen (or you can use pprint.pprint(), the Python "pretty printer," which prints this more nicely).
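If it helps to see the intended architecture and the freezing step in one place, here is a sketch under these assumptions: a recent Keras that provides `keras.applications.VGG16`, 150x150 RGB inputs (a hypothetical size; use whatever your fine-tuning code uses), and hypothetical helper names. It is not the provided fine-tuning code, which may build its model differently, so print that model's own layers to choose NUM_LAYERS.

```python
def print_layers(model):
    """List each layer with its index so you can decide how many to freeze."""
    for i, layer in enumerate(model.layers):
        state = "trainable" if layer.trainable else "frozen"
        print(f"{i:2d} {layer.name:20s} {state}")


def freeze_first(model, num_layers):
    """Freeze layers 0 .. num_layers-1 and leave the remaining layers trainable."""
    for i, layer in enumerate(model.layers):
        layer.trainable = i >= num_layers


def build_vgg16_sigmoid(input_shape=(150, 150, 3), weights="imagenet"):
    """VGG-16 convolutional base (no fully connected top) plus one sigmoid neuron."""
    # Imported inside the function so the helpers above work without Keras.
    from keras.applications import VGG16
    from keras.layers import Dense, Flatten
    from keras.models import Model

    base = VGG16(weights=weights, include_top=False, input_shape=input_shape)
    x = Flatten()(base.output)
    output = Dense(1, activation="sigmoid")(x)  # near 0 -> cat, near 1 -> dog
    return Model(inputs=base.input, outputs=output)

# Example usage:
# model = build_vgg16_sigmoid()
# print_layers(model)
# freeze_first(model, NUM_LAYERS)  # NUM_LAYERS chosen from the printout
# model.compile(loss="binary_crossentropy", optimizer="sgd", metrics=["accuracy"])
```

Changes to `trainable` only take effect when the model is compiled, so call `compile` after freezing.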

Q #12: For debugging purposes, you can lower the number of training and validation samples by a factor of, say, 20 and run for 1 epoch (note: if you make the number of training samples too small, it triggers an internal error in Keras). What accuracy do you obtain, and why?

- (12 points). Modify the code to also save the model, and make a separate Python "classification testing utility" that loads in the model and tests it on an input image of your choice. Try it out on a few cat and dog images from the test set (or images of your own choice).

- (12 points). Fine-tune on the original number of training and validation samples.

Q #13: If you fine-tune for 1 or 2 epochs using the original number of training and validation samples, what accuracy do you obtain, and why? Does your saved model file now work better with your testing utility?
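A minimal sketch of the "classification testing utility", assuming the fine-tuning script saved its model with a single `model.save(...)` call after training and fed the network 150x150 images rescaled by 1/255. Both are assumptions: match whatever your fine-tuning code actually does. `IMG_SIZE`, `label_from_score`, `load_image`, and `main` are hypothetical names.

```python
import argparse

import numpy as np

IMG_SIZE = (150, 150)  # hypothetical: must match the input size used for fine-tuning


def label_from_score(score, threshold=0.5):
    """Map the sigmoid output to a label: near 0 means cat, near 1 means dog."""
    return "dog" if score >= threshold else "cat"


def load_image(image_path):
    """Load one image as a batch of size 1, resized and scaled like the training data."""
    try:
        from keras.utils import img_to_array, load_img
    except ImportError:  # older Keras 2.x keeps these in keras.preprocessing.image
        from keras.preprocessing.image import img_to_array, load_img
    img = load_img(image_path, target_size=IMG_SIZE)  # resize to the network's input size
    return img_to_array(img)[np.newaxis, ...] / 255.0  # assumes training used rescale=1./255


def main(argv=None):
    from keras.models import load_model

    parser = argparse.ArgumentParser(description="Cat vs. dog classification testing utility")
    parser.add_argument("model_path", help="model file saved after fine-tuning")
    parser.add_argument("image_paths", nargs="+", help="one or more images to classify")
    args = parser.parse_args(argv)

    model = load_model(args.model_path)  # load once, then classify every image
    for path in args.image_paths:
        score = float(model.predict(load_image(path), verbose=0)[0, 0])
        print(f"{path}: {label_from_score(score)} (sigmoid output {score:.3f})")
```

Saved as, say, classify.py with an `if __name__ == "__main__": main()` guard at the bottom, it could be run as `python classify.py model.h5 cat1.jpg dog1.jpg` (all file names here are placeholders).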

Policies

Feel free to collaborate on solving the problem, but write your code individually.

Submission

Submit your assignment in a zip file named yourname_project3.zip. Please include your source code for each of the three parts, and your image "classification testing utility." Please also include a readme with answers to the 13 questions above.

Finally, submit your zip file to UVA Collab.