Abstract

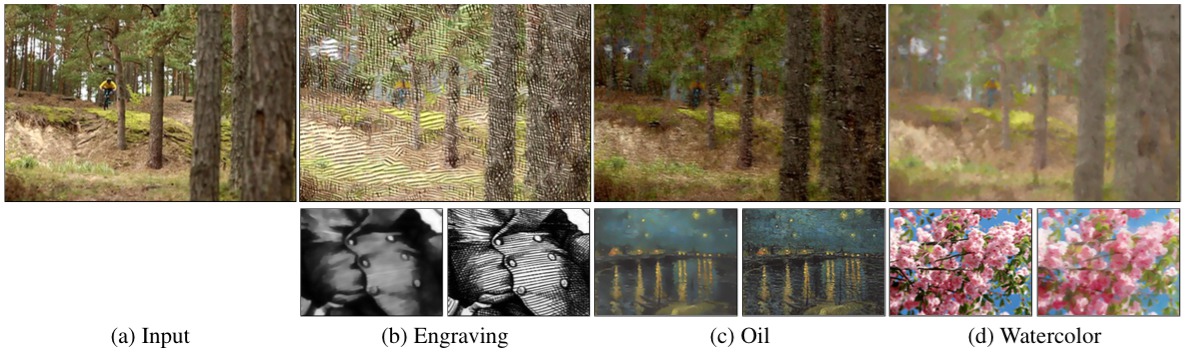

This paper presents a data structure that reduces approximate nearest neighbor query times for image patches in large datasets. Previous work in texture synthesis has demonstrated real-time synthesis from small exemplar textures. However, high performance has proved elusive for modern patch-based optimization techniques which frequently use many exemplar images in the tens of megapixels or above. Our new algorithm, PatchTable, offloads as much of the computation as possible to a pre-computation stage that takes modest time, so patch queries can be as efficient as possible. There are three key insights behind our algorithm: (1) a lookup table similar to locality sensitive hashing can be precomputed, and used to seed sufficiently good initial patch correspondences during querying, (2) missing entries in the table can be filled during pre-computation with our fast Voronoi transform, and (3) the initially seeded correspondences can be improved with a precomputed k-nearest neighbors mapping. We show experimentally that this accelerates the patch query operation by up to 9x over k-coherence, up to 12x over TreeCANN, and up to 200x over PatchMatch. Our fast algorithm allows us to explore efficient and practical imaging and computational photography applications. We show results for artistic video stylization, light field super-resolution, and multi-image inpainting.

Links